VIBE Coding with Cursor: Building a Full-Stack Gen-AI App with LLaVA, OpenAI, and MCP

A Product Leader’s Journey from Zero to Full-Stack using Cursor IDE, LLaVA Image Analysis, OpenAI, and the Model Context Protocol (MCP)

Introduction: A 20-Year Hiatus Ends in the Age of Gen-AI

After nearly two decades away from hands-on coding, I jumped headfirst into full-stack development. My background is in systems software engineering, so I had never built a complete web application, front end to back end. The rise of Generative AI, however, was too compelling to watch from the sidelines. What made the transition feasible was Cursor IDE and the architectural clarity of the Model Context Protocol (MCP).

Read my article “The Prompt is The Product” for a high-level positioning of MCP.

This article is a reflection on my journey building an end-to-end Generative AI application that accepts an image from a browser, routes it to an image analysis model (LLaVA) via a custom MCP server, and returns insightful responses through OpenAI’s LLM. It also captures why Cursor IDE might just be the ultimate playground for AI-native app builders.

1. The Stack: Gen-AI Meets Engineering Elegance

Frontend: HTML page served via a lightweight HTTP server with input for image upload

Cursor IDE: AI-native IDE that speeds up development by automating repetitive tasks and suggesting accurate context-aware code

Backend Server: Python FastAPI-based MCP server

LLM: OpenAI for text generation

Vision Model: LLaVA for image understanding

Protocol: MCP (Model Context Protocol) for coordinating between chat, models, and tools

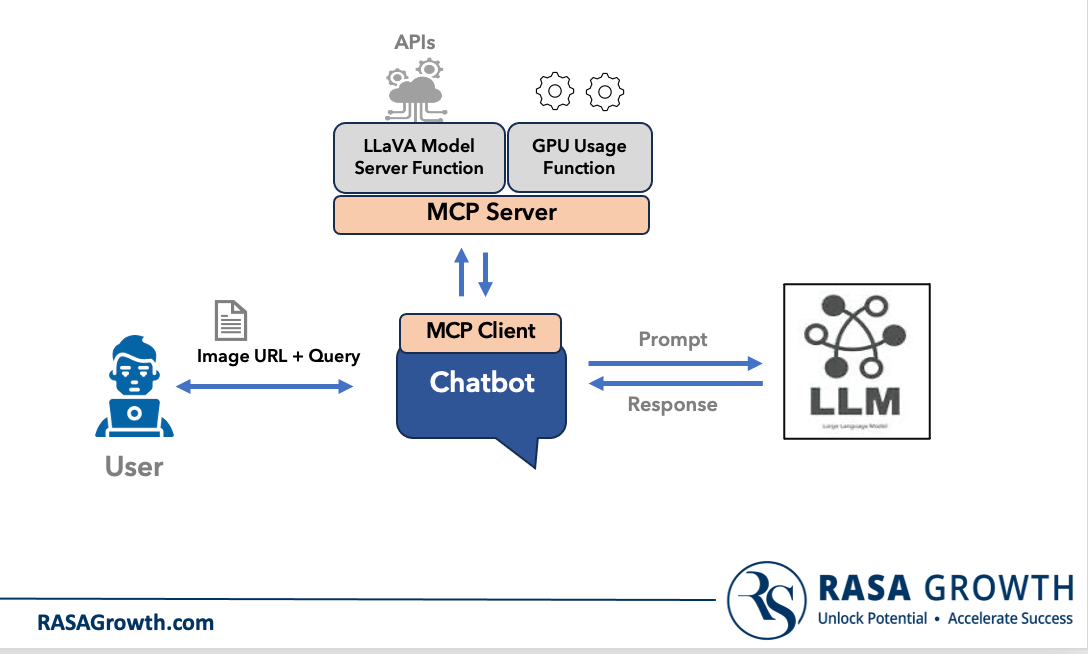

2. MCP Architecture: Orchestrating Intelligence

The application uses a custom-built MCP server to route function calls and search queries to the appropriate handlers. Here's a visual of the architecture:

Key Components:

User: Initiates the prompt via a chatbot UI

Chatbot: Sends the input to the MCP Client

MCP Client: Forwards the request to the MCP Server

MCP Server: Dispatches calls to function modules (such as LLaVA image analysis) and returns the results to the Chatbot for further reasoning with the LLM

LLM: Returns the final response for the chatbot to present
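
The server's dispatch role described above can be sketched in a few lines. This is a minimal illustration, not the code from my project: the names `TOOLS`, `tool`, `dispatch`, and `analyze_image` are hypothetical, the LLaVA handler is a stand-in that returns a placeholder string, and the request shape is a simplified dictionary rather than the actual MCP wire format.

```python
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to handler functions.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function under a tool name."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return decorator


@tool("analyze_image")
def analyze_image(image_url: str) -> str:
    # Stand-in for the LLaVA call; a real handler would run the vision model.
    return f"[caption for {image_url}]"


def dispatch(request: Dict[str, Any]) -> Dict[str, Any]:
    """Route a request shaped like {"tool": name, "arguments": {...}} to its handler."""
    name = request.get("tool")
    handler = TOOLS.get(name)
    if handler is None:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        result = handler(**request.get("arguments", {}))
    except TypeError as exc:
        # Raised when the arguments do not match the handler's signature.
        return {"ok": False, "error": str(exc)}
    return {"ok": True, "result": result}
```

Keeping the handlers behind a registry is what makes the server easy to extend: a new capability is one more decorated function, and the chatbot and client code do not change.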

Below is a high-level view of the architecture.

3. LLaVA Integration: Custom Image Analysis Pipeline

The new_llava_mcp_server.py module is a standout. It exposes the LLaVA model as a callable function within the MCP framework and is tuned for fast inference.

Unique features include:

Quantization Support: Reduces memory footprint

Image Compression: Automatically reduces image size to enhance upload and inference speed

Model Initialization at Launch: Pre-warms the model to avoid startup latency

This design ensures the image is processed quickly and sent through LLaVA, and that the model's response is integrated into the LLM conversation flow.
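
Two of the ideas above, shrinking the image before inference and loading the model once at launch, can be illustrated with a small sketch. Everything here is an assumption for illustration: `fit_within`, `ModelHolder`, and the 768-pixel default are hypothetical and not taken from new_llava_mcp_server.py, and the loader is any function that returns a callable model. The sketch only computes the target dimensions; the actual resize would be done by an imaging library.

```python
from typing import Callable, Optional, Tuple


def fit_within(width: int, height: int, max_side: int = 768) -> Tuple[int, int]:
    """Scale dimensions down so the longer side is at most max_side, keeping the aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # Already small enough; never upscale.
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


class ModelHolder:
    """Loads a model once (ideally at server start) and reuses it for every request."""

    def __init__(self, loader: Callable[[], Callable[[str], str]]) -> None:
        self._loader = loader
        self._model: Optional[Callable[[str], str]] = None
        self.load_count = 0  # Exposed so the single-load behavior can be checked.

    def warm_up(self) -> None:
        # Call this during startup so the first request does not pay the load cost.
        if self._model is None:
            self._model = self._loader()
            self.load_count += 1

    def __call__(self, prompt: str) -> str:
        self.warm_up()  # No-op when the model is already loaded.
        return self._model(prompt)
```

In a FastAPI server, `warm_up()` would typically be called from a startup hook so the expensive load happens before the first upload arrives.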

4. Cursor IDE: Coding at the Speed of Thought

What truly empowered me to ship a working full-stack AI application in a matter of days was Cursor:

Inline AI pair programmer that understands the context of each file

Multi-file awareness: Seamlessly navigates interconnected components like HTML, Python backend, and client logic

Function-aware autocompletion: Gets MVP-ready prototypes working in hours, not weeks

Cursor made it feel like the IDE was my engineering partner, not just a tool.

5. Browser Output: From Upload to Insight

The user simply enters the URL of an image (the image used in the screenshot above) and a query about it. Behind the scenes, the image is sent to the MCP Client and routed to LLaVA, and the resulting caption is passed to OpenAI’s LLM, which synthesizes the final answer to the query. The result is a conversational response that draws on both vision and language understanding.
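
The two-stage flow described above (vision model first, then the LLM) can be sketched as a small function. This is a hedged illustration under stated assumptions: `build_prompt` and `answer_image_query` are hypothetical names, the prompt wording is my own example, and the two model calls are passed in as plain callables so the sketch runs without LLaVA or an OpenAI key.

```python
from typing import Callable


def build_prompt(caption: str, query: str) -> str:
    """Combine the vision model's caption with the user's question into one LLM prompt."""
    return (
        "You are answering a question about an image.\n"
        f"Image description: {caption}\n"
        f"Question: {query}\n"
        "Answer using only the description above."
    )


def answer_image_query(
    image_url: str,
    query: str,
    describe_image: Callable[[str], str],  # e.g. a call routed to LLaVA via the MCP server
    complete_text: Callable[[str], str],   # e.g. a call to an OpenAI chat model
) -> str:
    """Stage 1: caption the image. Stage 2: let the LLM answer using that caption."""
    caption = describe_image(image_url)
    return complete_text(build_prompt(caption, query))
```

Passing the model calls in as arguments keeps the orchestration logic testable with simple stubs and makes it straightforward to swap either model later.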

6. Lessons and Reflections

Cursor IDE flattened the learning curve, letting me end a 20-year coding hiatus with confidence

MCP Server provided modularity and extensibility

Gen-AI Models like OpenAI’s GPT-4 and LLaVA unlock unprecedented capabilities when orchestrated well

Acknowledgments

Gratitude to the instructors and mentors at Support Vectors, where I currently serve as a Strategic Growth Advisor. Their rigorous coursework gave me both the grounding and the motivation to build something meaningful from the ground up. Send me a DM for access to the full code on GitHub.

Closing Thoughts

This is just the beginning. If I can ship a full-stack Gen-AI app after two decades away from code, imagine what an AI-native future looks like for product leaders, startup founders, and engineering teams. With tools like Cursor and frameworks like MCP, the future isn’t just built; it’s co-created with AI.