The Prompt is the Product

The Evolution of LLM Interaction and Intelligent System Design

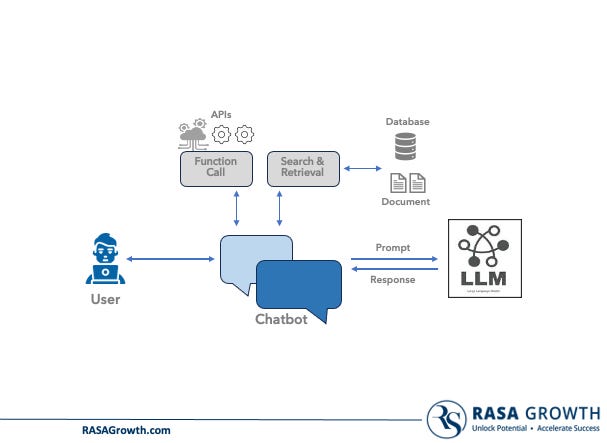

We are entering a transformative era in software design and interaction. At the core of this shift are large language models (LLMs): versatile systems that can reason, generate content, and take action through natural language. While much attention goes to model architecture and capabilities, one critical element often operates in the background yet shapes the entire experience: the prompt.

Every interaction with an LLM is still a prompt

Prompts have evolved from static examples into dynamic, multi-layered orchestrators of system behavior. Today they define not only what the model says but also how it reasons, which external tools it uses, what domain knowledge it retrieves, and how it navigates complex tasks.

This article traces the evolution of prompts as an interface layer—from intuitive hacks to structured, programmable, and protocol-driven elements of intelligent architecture. We explore how prompting has matured into an engineering discipline that now underpins tools, agents, retrieval systems, and protocols—and where it's heading next.

1. Language as Interface: The Foundational Shift

With early LLMs like GPT-2, developers discovered something powerful—natural language could serve as a universal control interface. It was flexible and required no formal syntax. But it was also brittle, with high variability in outcomes.

This moment seeded the idea that language itself could abstract away computational complexity. Natural language as an interface:

- Enabled new users to interact with AI systems
- Reduced the need for GUIs or rigid APIs
- Introduced a new layer of software abstraction

Prompts are not just inputs—they are contracts between human intent and machine reasoning

2. Prompt Engineering: From Intuition to Architecture

As LLM usage scaled, developers began experimenting with systematic prompt composition techniques to increase consistency and accuracy. This gave rise to prompt engineering, a skill set blending writing, logic, and experimentation, which introduced several foundational patterns:

- Few-shot prompting: Provide examples in the prompt to show the model the desired behavior.
- Role-based prompting: Frame the model’s behavior (e.g., “You are a legal advisor”).
- Prompt templates: Enable reuse across tasks or domains.
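The three patterns are often combined in a single prompt. The sketch below assumes a chat-style message format (a list of `role`/`content` dictionaries); the sentiment task, `build_messages`, and the example messages are hypothetical. It pairs a role-setting system message with few-shot examples rendered as earlier user/assistant turns, plus a reusable template for the final input.

```python
from string import Template

# Role-based prompting: a system message frames the model's behavior.
# (Hypothetical sentiment task, used only for illustration.)
SYSTEM_ROLE = "You are a customer-support analyst. Classify the sentiment of each message."

# Few-shot prompting: (input, expected label) pairs shown to the model.
FEW_SHOT_EXAMPLES = [
    ("The new dashboard is fantastic!", "positive"),
    ("I've been waiting three days for a reply.", "negative"),
]

# Prompt template: one reusable format for every user turn.
USER_TEMPLATE = Template("Message: $message\nSentiment:")


def build_messages(message: str) -> list[dict[str, str]]:
    """Assemble a role-based, few-shot prompt as a list of chat messages."""
    messages = [{"role": "system", "content": SYSTEM_ROLE}]
    for example_input, label in FEW_SHOT_EXAMPLES:
        # Each example becomes a user/assistant pair the model can imitate.
        messages.append({"role": "user", "content": USER_TEMPLATE.substitute(message=example_input)})
        messages.append({"role": "assistant", "content": label})
    # The real input goes last, rendered with the same template.
    messages.append({"role": "user", "content": USER_TEMPLATE.substitute(message=message)})
    return messages


for m in build_messages("Setup took five minutes. Impressed."):
    print(f"{m['role']}: {m['content']}")
```

Keeping the examples and template as data rather than one hand-edited string is what makes the prompt reusable across tasks.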

These early patterns revealed that prompt quality had system-level implications, making prompt engineering as critical as code. But the approach also had limits: prompts were hand-crafted, hard to scale, and dependent on a deep intuition for model behavior.

Prompting evolved from clever phrasing to system-level architectural planning

📖 Research Reference: Brown et al. (2020), Language Models are Few-Shot Learners (arXiv:2005.14165)

3. From Prompt Strings to Structured Interfaces

As developers integrated LLMs into production systems, the need for reliability and precision pushed prompting beyond plain text. New requirements emerged:

- Parameterized inputs: Dynamic, data-driven prompts
- Context-aware adaptivity: Modify prompts based on user/session
- Type-safe outputs: Ensure predictable, structured responses
- Tool invocation: LLMs suggesting external function calls

This shift laid the groundwork for structured prompts and programmatic interfaces.

Structured prompts bridge language and logic—enabling modular integration with software systems

3.1 JSON Schema and Pydantic: Enforcing Structure

Developers began using JSON Schema and Pydantic models to define the exact format of desired responses. This allowed systems to validate LLM outputs before triggering downstream actions, which brought:

- Output type safety
- Validation before execution
- Safer system integration

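JSON Schema

```json
{
  "name": "create_invoice",
  "parameters": {
    "type": "object",
    "properties": {
      "customer_name": {"type": "string"},
      "email": {"type": "string", "format": "email"},
      "amount": {"type": "number"},
      "due_date": {"type": "string", "format": "date"}
    },
    "required": ["customer_name", "email", "amount"]
  }
}
```

Pydantic Model

```python
from datetime import date
from typing import Optional

from pydantic import BaseModel, EmailStr  # EmailStr requires the email-validator package


class Invoice(BaseModel):
    customer_name: str
    email: EmailStr
    amount: float
    due_date: Optional[date] = None  # optional, matching the schema's "required" list
```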
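To make "validation before execution" concrete, here is a minimal sketch that parses a raw model response into a variant of the Invoice model and rejects it on failure. It assumes Pydantic is installed; `parse_invoice` and the sample payloads are hypothetical, and `email` is a plain `str` here because `EmailStr` needs the optional `email-validator` package. Building the model with `Invoice(**data)` works in both Pydantic v1 and v2.

```python
import json
from datetime import date
from typing import Optional

from pydantic import BaseModel, ValidationError


class Invoice(BaseModel):
    customer_name: str
    email: str  # plain str in this sketch; EmailStr would add format checking
    amount: float
    due_date: Optional[date] = None


def parse_invoice(raw_llm_output: str) -> Optional[Invoice]:
    """Validate raw model output before any downstream action runs."""
    try:
        return Invoice(**json.loads(raw_llm_output))
    except (json.JSONDecodeError, ValidationError, TypeError) as err:
        # Malformed JSON, wrong types, missing fields, or a non-object payload:
        # reject the output instead of passing it to the billing backend.
        print(f"Rejected LLM output: {err}")
        return None


raw = '{"customer_name": "Acme Corp", "email": "billing@acme.example", "amount": 1200}'
invoice = parse_invoice(raw)
if invoice is not None:
    print(invoice.amount)  # only a validated object reaches the execution layer
```

The design choice is to fail closed: anything that does not match the schema returns `None`, so the caller must handle rejection explicitly before calling any backend.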

Structured prompting enables LLMs to become type-safe interfaces between natural language and backend execution layers

3.2 Function Calling: Orchestrated Execution

In 2023, OpenAI introduced function calling, and Anthropic and other providers later added comparable tool-use capabilities. With function calling, an LLM can return a structured JSON object rather than only a text answer, specifying:

- The function to call
- The arguments to pass

```json
{
  "function_call": {
    "name": "get_weather",
    "arguments": { "location": "San Francisco" }
  }
}
```

For an LLM trained for function calling to produce a response like this, the prompt must also include a list of all available functions. Here is an example of the components of such a prompt.

System Message Prompt

```json
{
  "role": "system",
  "content": "You are a helpful assistant that can call functions when needed to answer user questions. Use the function call format if the task requires external data."
}
```

User Message

```json
{
  "role": "user",
  "content": "What’s the weather like today in San Francisco?"
}
```

Available Functions (Provided to LLM)

```json
[
  {
    "name": "get_weather",
    "description": "Get the current weather for a given location",
    "parameters": {
      "type": "object",
      "properties": {
        "location": {
          "type": "string",
          "description": "The city and state, e.g. San Francisco, CA"
        }
      },
      "required": ["location"]
    }
  },
  {
    "name": "get_time",
    "description": "Get the current local time for a given location",
    "parameters": {
      "type": "object",
      "properties": {
        "location": {
          "type": "string",
          "description": "The city and country"
        }
      },
      "required": ["location"]
    }
  }
]
```

It is critical to note that LLMs generate structured plans, not executable code.

LLMs produce function-call suggestions that the client application must parse and execute, then return the results to the model so it can continue reasoning with the updated inputs. This establishes a new control flow in which prompts define actionable intent.
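The client side of this control flow can be sketched in a few lines. This is an illustration, not any provider's SDK: the stub `get_weather`/`get_time` implementations, `REGISTRY`, and `execute_function_call` are hypothetical, and the shape of the returned message is an assumption, since field names for returning results differ between providers.

```python
import json
from typing import Any, Callable


# Stub implementations of the two advertised functions; a real application
# would call weather and time services here instead of returning canned data.
def get_weather(location: str) -> dict[str, Any]:
    return {"location": location, "forecast": "sunny", "temp_f": 68}


def get_time(location: str) -> dict[str, Any]:
    return {"location": location, "local_time": "09:30"}


# Registry mapping the function names shown to the model onto local callables.
REGISTRY: dict[str, Callable[..., dict[str, Any]]] = {
    "get_weather": get_weather,
    "get_time": get_time,
}


def execute_function_call(model_response: dict[str, Any]) -> dict[str, str]:
    """Parse the model's suggested call, run it locally, and wrap the result."""
    call = model_response["function_call"]
    name = call["name"]
    if name not in REGISTRY:
        # Never execute a function the application did not explicitly register.
        raise ValueError(f"Model requested unknown function: {name}")
    args = call["arguments"]
    if isinstance(args, str):
        # Some APIs deliver arguments as a JSON-encoded string; accept both forms.
        args = json.loads(args)
    result = REGISTRY[name](**args)
    # Illustrative message for sending the result back; field names vary by provider.
    return {"role": "function", "name": name, "content": json.dumps(result)}


response = {"function_call": {"name": "get_weather", "arguments": {"location": "San Francisco"}}}
print(execute_function_call(response))
```

The returned message would be appended to the conversation and sent back to the model, which then writes its final answer from the tool result.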

Function calling decouples reasoning from execution—enabling modular, agent-like task orchestration

📖 Learn More: OpenAI Function Calling Guide

4. Retrieval-Augmented Generation (RAG): Grounding Prompts in Knowledge

RAG systems emerged to address hallucination—LLMs generating plausible but false information. Instead of relying solely on model parameters, RAG injects relevant retrieved content into the prompt.

RAG pipeline:

1. Embed the user query
2. Search a vector database for similar content
3. Inject the top-k results into the prompt
4. Ask the LLM to generate an answer based on that context

A prompt assembled this way might look like:

```text
System: You are a SaaS analyst. Use only the provided context.
Context: ARR is $12.4M, churn is 3.2%.
User: How healthy is our SaaS business?
```
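The four pipeline steps can be sketched end to end. This is a toy under loud assumptions: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, a Python list plays the role of the vector database, and the documents are illustrative. Only the shape of the pipeline (embed, rank by cosine similarity, inject top-k, prompt) carries over to a real system.

```python
import math
import re
from collections import Counter

# Toy "vector database": a list of documents (illustrative content only).
DOCUMENTS = [
    "ARR is $12.4M.",
    "Churn is 3.2%.",
    "Office snacks get restocked every Friday.",
]


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Steps 1-2: embed the query and rank documents by similarity."""
    q = embed(query)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, k: int = 2) -> str:
    """Steps 3-4: inject the top-k results and ask the model to use only them."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return (
        "System: You are a SaaS analyst. Use only the provided context.\n"
        f"Context:\n{context}\n"
        f"User: {query}"
    )


print(build_rag_prompt("What are our ARR and churn?"))
```

Because the knowledge lives in `DOCUMENTS` rather than in the model, updating the answer only requires updating the store, not retraining.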

RAG decouples knowledge from reasoning, enabling dynamic context injection into prompts

📖 Research Reference: Lewis et al. (2020), Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks (arXiv:2005.11401)

5. AI Agents: Prompt-Driven Orchestration

5.1 Single-Agent Reasoning

Agent frameworks like AutoGPT, LangChain, and CrewAI treat LLMs as reasoning cores. These agents build and manage prompts dynamically:

1. Receive a goal ("Draft a GTM strategy")
2. Plan subtasks
3. Call tools, APIs, or search engines
4. Interpret results
5. Refine and iterate until the task is complete

These agents loop through prompt cycles, using memory and logic to handle tasks autonomously.
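A ReAct-style version of this loop (thought, action, observation, repeat) can be sketched without any framework. The model call is replaced by a hypothetical `scripted_llm` that picks the next step from the transcript, and `search` is a stub tool; in a real agent, the transcript would be rendered into each new prompt and sent to an LLM.

```python
from typing import Callable


# Hypothetical tool: a stub that would normally call a real search API.
def search(query: str) -> str:
    return f"[stub search results for: {query}]"


TOOLS: dict[str, Callable[[str], str]] = {"search": search}


def scripted_llm(transcript: list[str]) -> dict[str, str]:
    """Stand-in for a model call: picks the next step from the transcript so far."""
    if not any(line.startswith("Observation:") for line in transcript):
        return {"thought": "I need market research first.", "action": "search",
                "input": "target segments for a GTM strategy"}
    return {"thought": "I have enough context to draft.",
            "final": "GTM strategy draft based on the gathered research."}


def run_agent(goal: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Think, act with a tool, observe, and repeat until a final answer or the step limit."""
    transcript = [f"Goal: {goal}"]
    for _ in range(max_steps):
        # In a real agent, the transcript would be rendered into the next prompt.
        step = scripted_llm(transcript)
        transcript.append(f"Thought: {step['thought']}")
        if "final" in step:
            return step["final"], transcript
        transcript.append(f"Action: {step['action']}[{step['input']}]")
        transcript.append(f"Observation: {TOOLS[step['action']](step['input'])}")
    return "Stopped: step limit reached without a final answer.", transcript


answer, trace = run_agent("Draft a GTM strategy")
print("\n".join(trace))
print(answer)
```

The `max_steps` cap is a deliberate guard: an autonomous loop needs an explicit stopping condition in case the model never declares the task complete.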

Agents automate and sequence prompt construction, execution, and evaluation

📖 Research Reference: Yao et al. (2022), ReAct: Synergizing Reasoning and Acting in Language Models (arXiv:2210.03629)

5.2 Multi-Agent Collaboration

Multi-agent systems assign specialized roles:

- Retriever Agent: Finds knowledge
- Planner Agent: Sets strategy
- Tool Agent: Executes functions
- Critique Agent: Validates results

The agents pass prompts and results to one another, coordinating through structured interaction protocols and mimicking organizational behavior.
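The hand-offs between the four roles can be sketched as a log of structured messages. Every agent here is a hypothetical stub (in practice each would wrap its own LLM call with a role prompt), and the flaky tool is simulated so the critique step has something to reject.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    recipient: str
    content: str


# Hypothetical stub agents. In a real system each would wrap an LLM call
# with its own role prompt (e.g. "You are the Critique Agent...").
def retriever_agent(task: str) -> str:
    return f"notes on: {task}"


def planner_agent(notes: str) -> str:
    return f"plan based on [{notes}]"


def tool_agent(plan: str, attempt: int) -> str:
    # Simulates a flaky tool that fails on the first attempt only.
    return "error: tool timeout" if attempt == 1 else f"executed {plan}"


def critique_agent(result: str) -> bool:
    # Approve anything that is not an error report.
    return not result.startswith("error")


def run_team(task: str, max_attempts: int = 3) -> list[Message]:
    """Route structured messages between role-specialized agents."""
    log = [Message("user", "retriever", task)]
    notes = retriever_agent(task)
    log.append(Message("retriever", "planner", notes))
    plan = planner_agent(notes)
    log.append(Message("planner", "tool", plan))
    for attempt in range(1, max_attempts + 1):
        result = tool_agent(plan, attempt)
        log.append(Message("tool", "critic", result))
        if critique_agent(result):
            log.append(Message("critic", "user", "approved"))
            break
        # Rejected results go back to the tool agent for another attempt.
        log.append(Message("critic", "tool", "retry"))
    return log


for msg in run_team("Draft a GTM strategy"):
    print(f"{msg.sender} -> {msg.recipient}: {msg.content}")
```

The critique-and-retry step is where the resilience comes from: a failed tool result is routed back instead of reaching the user.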

MAS architectures bring modularity, resilience, and team-like reasoning to LLM workflows

📖 Research Reference: Qian et al. (2023), ChatDev: Communicative Agents for Software Development (arXiv:2307.07924)

6. Toward Protocols: The Rise of MCP and Standardization

As LLM ecosystems grow, the need for interoperability has led to standards such as the Model Context Protocol (MCP), introduced by Anthropic. MCP defines a shared way for applications to expose capabilities to models, including:

- Reusable prompt templates
- Tool registration and invocation
- Access to external context and data sources

This is similar to early web evolution: from raw HTTP to REST APIs to service meshes.
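MCP messages are JSON-RPC 2.0 requests, so a toy server that answers `tools/list` and `tools/call` shows how tool registration and invocation become protocol messages rather than prompt text. This is a stdlib-only sketch modeled on the MCP specification's tool methods, not the official SDK; the registry, the stub handler, and the exact result fields should be checked against the current spec.

```python
import json
from typing import Any

# Toy tool registry. "inputSchema" is a JSON Schema, like the function
# definitions in the function-calling section; the handler is a stub.
TOOLS: dict[str, dict[str, Any]] = {
    "get_weather": {
        "description": "Get the current weather for a given location",
        "inputSchema": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
        "handler": lambda args: f"Stub forecast for {args['location']}",
    }
}


def _error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request the way an MCP-style server might."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        # Advertise each tool's name, description, and input schema.
        result: Any = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"location": "San Francisco"}}}
print(handle_request(json.dumps(call)))
```

Because discovery (`tools/list`) and invocation (`tools/call`) share one wire format, any compliant client can use any compliant server's tools without bespoke prompt wiring.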

MCP moves prompting from an ad-hoc practice to a protocol-first system design model

📖 Explore: MCP Proposal Overview

What’s Next: Prompting in the Post-Interface Era

Looking forward, several trends are emerging to reshape LLM interactions:

- Multi-modal prompts: Combining text, voice, images, and code
- Embedded ethics and guardrails: Defining rules within prompts
- Long-term memory: Persistent context across sessions
- Autonomous agents: Zero-shot orchestration of tools and tasks

Prompts will define not just interactions—but the architecture of cognition, execution, and ethics

Conclusion: The Rise of Prompt-Driven Architecture

The evolution of prompts mirrors the broader evolution of software itself—from handcrafted to composable, from intuitive to engineered, from informal to protocol-based. Prompts are no longer static inputs; they are programmable contracts, strategic levers, and orchestrators of intelligent behavior.

This transformation holds significant implications for business leaders, product managers, and engineers alike. Just as APIs revolutionized the internet and microservices transformed distributed systems, prompts are ushering in a new era of cognitive interfaces—a world where business logic, product workflows, and system capabilities can all be expressed and executed through structured natural language.

What began as an informal language hack has become the most important interface paradigm of the AI era. Prompts are now:

- Structured (JSON schemas)
- Composable (via agents)
- Grounded (via RAG)
- Executable (via function calling)
- Standardized (via protocols)

As we design systems that are increasingly autonomous, dynamic, and adaptive, the role of prompts will only grow in importance. Prompts are the language of collaboration between humans and machines, and the new interface layer of intelligent software systems.

The future belongs to those who don’t just prompt better — but build prompt-driven architectures that are scalable, composable, and trusted

To lead in this next wave, architects and technologists must treat prompting as software architecture—with all the modularity, testability, and system integration that entails.

The future of AI will be built not just with code, but with carefully designed, orchestrated prompts.